Hello, I'm Has

I am a passionate technologist and a consultant by trade. I work as the executive director at vNEXT Solutions, based in Melbourne, Australia.

In my work, I focus on Data, IoT, AI, and DevOps. I have successfully delivered many secure, scalable, and award-winning projects across a variety of sectors, including medical, industrial, financial, and environmental.

I am also a Microsoft Azure MVP (Most Valuable Professional) and a regular organiser and speaker at local and international conferences. Moreover, I am a board member on the Committee for Global AI.

You can follow me on Twitter or LinkedIn, or get in touch.

Here's a list of my recent posts. I hope you enjoy them :)

Microsoft Build 2019 - Updates and demos

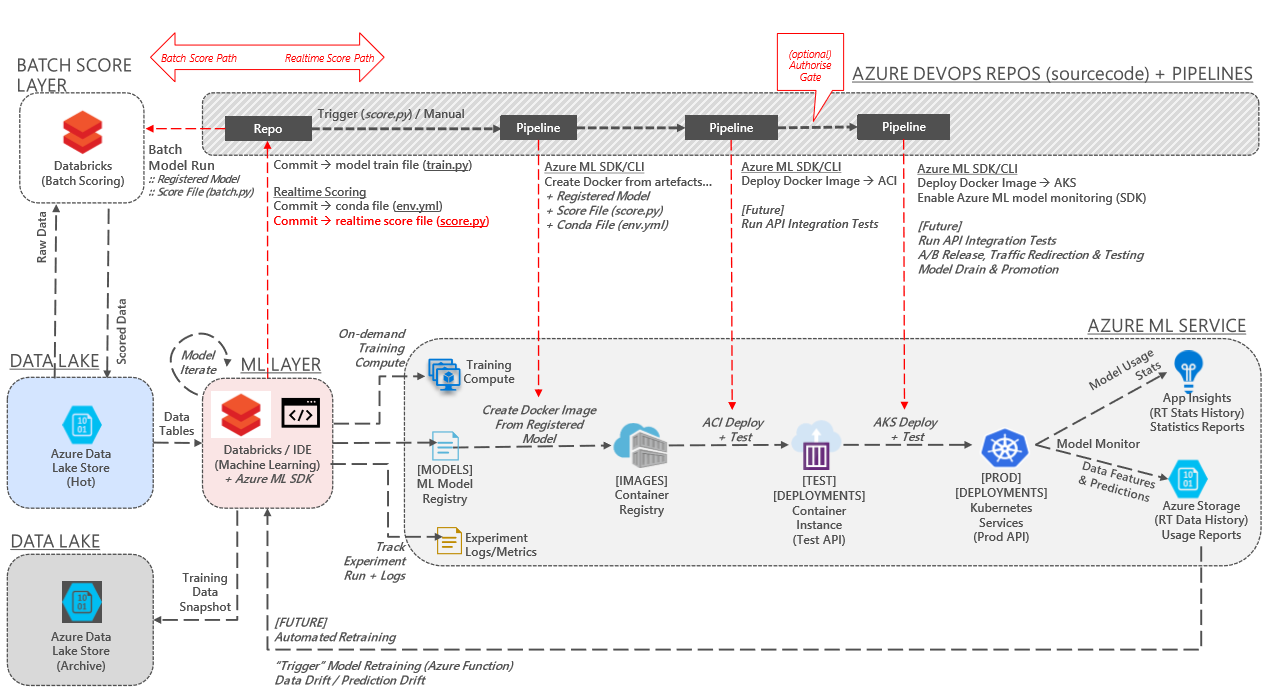

MLOps on Azure by Rolf Tesmer - Melbourne Azure Meetup - July 2019

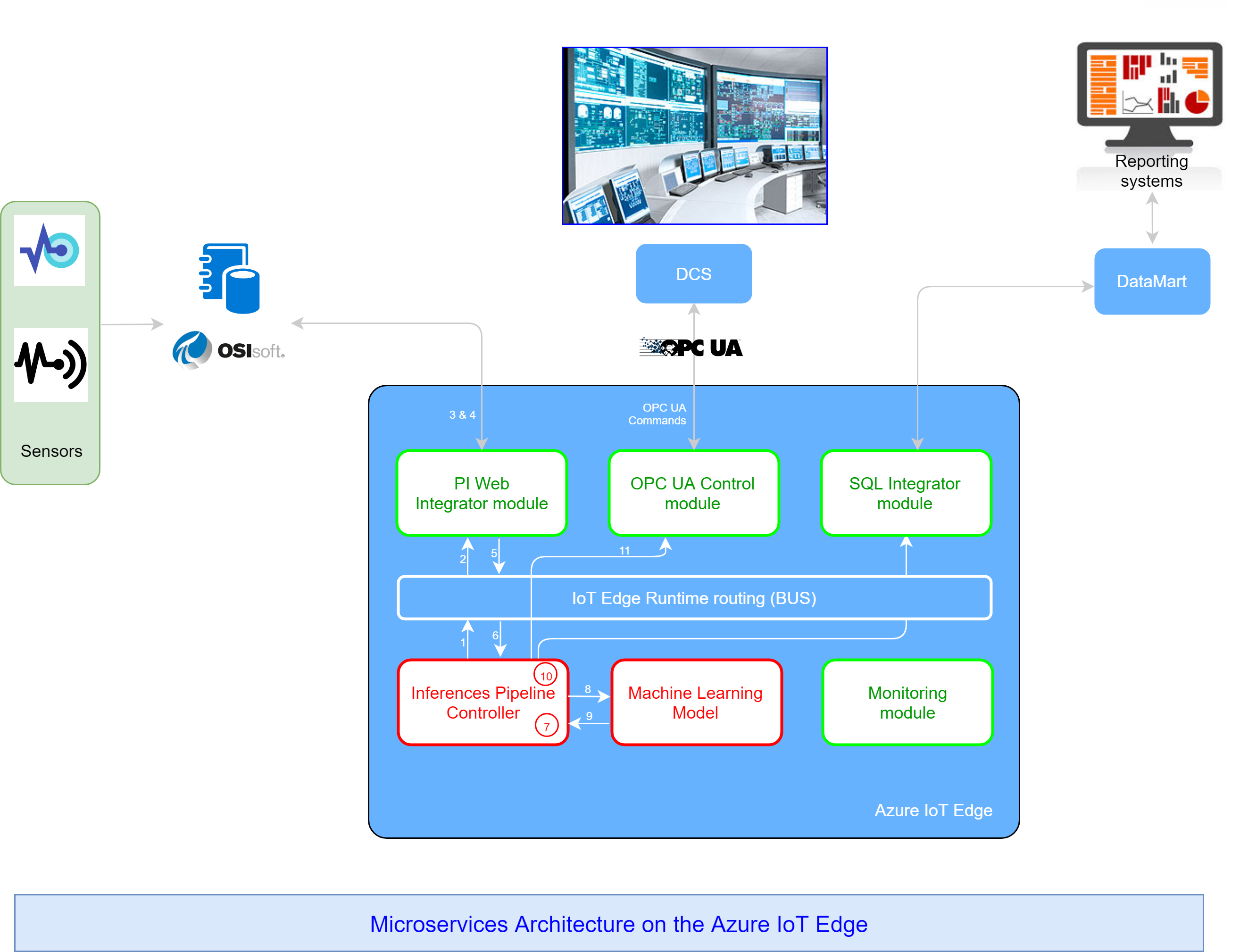

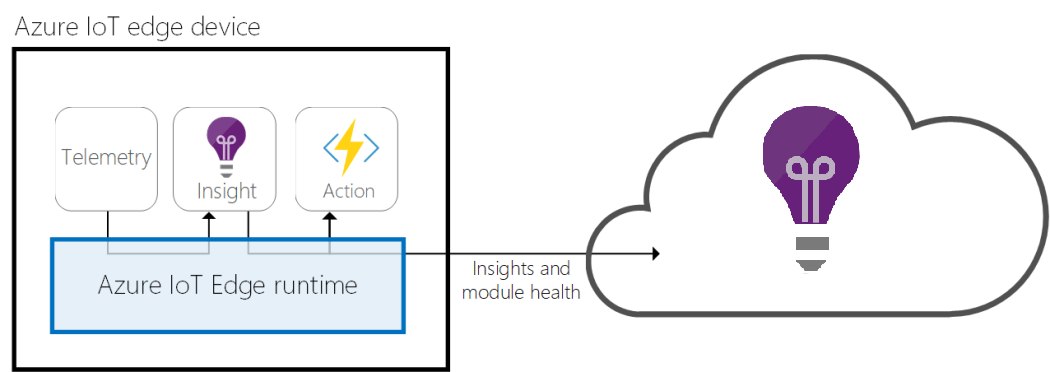

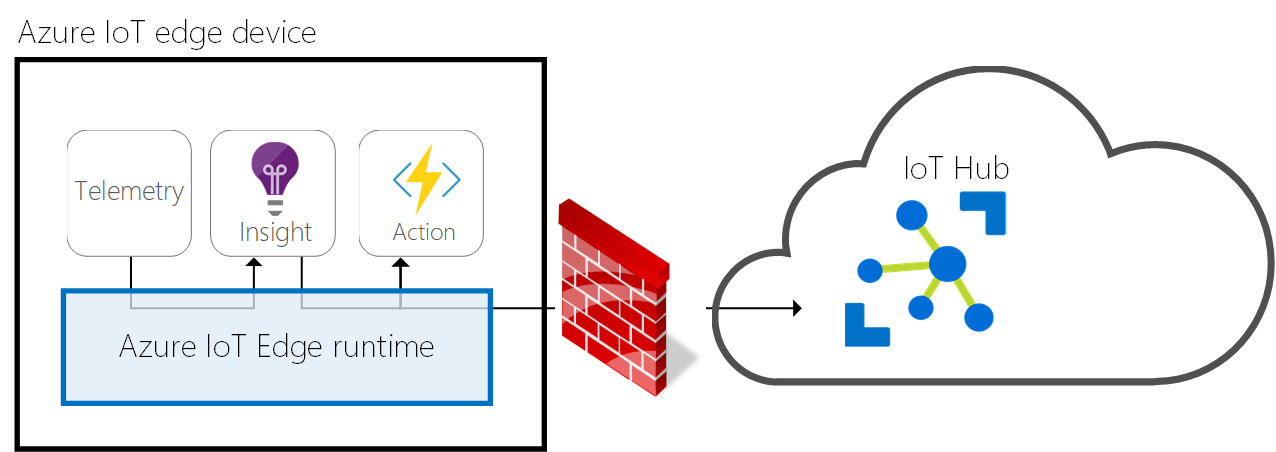

AI and IoT Integration on the Edge - April 2019

AI & IoT on the Edge - Global Integration Bootcamp 2019

Azure Monthly News Summary - November 2018

Azure Monthly News - November 2018 (from Melbourne Azure Meetup)

Azure Monthly News Summary - September 2018

Azure Monthly News - Sept 2018 (from Melbourne Azure Meetup)

I am pledging 5% of my salary to charities for the rest of my life

TL;DR

- What? I am pledging 5% of my salary to charities for the rest of my life.

- Where does the money go? The money is donated to charities recommended by GiveWell. GiveWell is a non-profit organisation dedicated to assessing charities and finding the most effective ways to spend donations and aid.

- Why am I doing it? The short answer is that I feel it’s the right thing to do. Giving up 5% of my salary would not have a major impact on my quality of life. It will certainly affect how long it takes me to pay off my mortgage, but the impact this money can have on the poorest people is far greater than its impact on me.

- So what? Why am I sharing this? Well, I am hoping that sharing this will get you to think about doing the same. You don’t have to donate 5% of your salary; you can start by donating 3%, 2%, or even just 1%.

The longer version

If you are still reading, then you are interested in knowing the whole story, and I am glad you are :)

Congrats, you are a Solution Architect, now what?

Last week, I was fortunate enough to speak at DDDPerth, which was a great conference with an awesome and engaged audience. There were about 350 attendees, and the organisers and volunteers were amazing. Here is the link to my slides, and I would love to hear your feedback:

Using F#, Fake, and VSTS for a Consistent, Repeatable, and Dependable DevOps Process

I have been using F#, Fake and Visual Studio Online for DevOps for a while, and I have to say that I really love it. I spoke about this a few weeks back at DDD Melbourne (DDDByNight), and recently I presented the same topic at NDC Sydney. Here I share the slides and the demo code; I hope you find them useful.

Using Confluence as Architecture/Documentation Repository

I’m starting on a new project, so I need to document the decisions that I am making in the design and processes. After evaluating a number of platforms and methods, I concluded that Confluence was a good fit. Here I summarise my reasons; I hope you find them useful:

The story of building Zen Thermostat IoT & Mobile Apps

Last week, I gave a talk at the Melbourne Mobile meetup about the story of building the Zen Thermostat IoT and mobile apps. Here are the slides for anyone who is interested 🙂

Inter-App Communications in Xamarin.iOS and Why OpenUrl Freezes for 10+ sec

Inter-App Communications

A Journey of Hunting Memory leaks in Xamarin

My client was reporting performance issues with an existing app that had been developed internally, and I had to find the problems. So here is my journey of finding the issues and resolving them. The fixes reduced memory usage to a quarter of what it was, and usage stabilised at that level. I hope this blog post can help you too in refining your app and proactively resolving any performance issues.

PIN Number Password Fields for Windows Xaml

In the Windows XAML world, a bad design decision was made to keep the PasswordBox and the TextBox separate, meaning that the PasswordBox does not inherit common properties from the TextBox. As a consequence, you cannot do many of the things that you normally do with a TextBox, like customising the appearance of the password box (say you want to centre-align the text, or you want to show the numeric keypad instead of the alphabetic keyboard). I had this exact requirement 2 weeks ago and had to solve it, so this blog post describes the approach I took.

SQLite.Net.Cipher: Secure your data on all mobile platforms seamlessly and effortlessly

SQLite databases have become the first choice for storing data on mobile devices. SQLite databases are just files stored on the file system, so other apps or processes can read and write data in the database file. This is true on almost all platforms: you could root or jailbreak the device, take the database file, and do whatever you like with it. That’s why it is very important to start looking into securing your data as much as possible.

Azure Application Insights for Xamarin iOS

Azure Application Insights (AI) is a great instrumentation tool that can help you learn how your application is doing at run time. It is currently in preview, so bear that in mind when developing production-ready apps. It lets you log many different kinds of information, such as traces, page views, custom events, metrics, and more.

What you need to know before you start developing Windows Phone apps

![Windows Phone](https://i2.wp.com/www.hasaltaiar.com.au/wp-content/uploads/2015/07/windowsPhone.jpg)

Let’s Hack It: Securing data on the mobile, the what, why, and how

![Coles solution](https://i2.wp.com/www.hasaltaiar.com.au/wp-content/uploads/2015/05/coles-solution.png)

Reachability.Net: A unified API for reachability (network connectivity) on Xamarin Android and iOS

Do you need to check for internet connection on your mobile app? Don’t we all do it often and on many platforms (and for almost all apps)?

I found myself implementing it on iOS and on Android and then copying my implementation into almost every mobile app that I write. This is not efficient and can be done better, right? 🙂

Sharing Azure Active Directory SSO access tokens across multiple native mobile apps

This blog post is the fourth and final in the series that covers Azure Active Directory Single Sign-On (SSO) authentication in native mobile applications.

Using Azure AD SSO tokens for Multiple AAD Resources From Native Mobile Apps

This blog post is the third in a series that covers Azure Active Directory Single Sign-On (SSO) authentication in native mobile applications.

Use What is Left of Time in this Year and Start Ticking Some Boxes

![To-do list](https://i0.wp.com/www.hasaltaiar.com.au/wp-content/uploads/2014/11/to-do-list.jpg)

How to Best handle AAD access tokens in native mobile apps

This blog post is the second in a series that covers Azure Active Directory SSO authentication in native mobile apps.

Implementing Azure Active Directory SSO (Single Sign on) in Xamarin iOS apps

This blog post is the first in a series that covers Azure Active Directory SSO authentication in native mobile apps.

Installing WordPress Website in a Subfolder on your Azure Website

This blog post shows you how to install a WordPress website in a sub-folder of your Azure website. You might ask why you would need to do that, and that is a good question, so let me start with the reasons:

Preventing Cross-Site Request Forgery Attacks in a Public Web API

Cross-Site Request Forgery (CSRF, or Session Riding) is the invocation of unauthorised commands that are triggered by a trusted user. A malicious website can exploit the fact that a user is logged in to a vulnerable website to ride that session and forge requests. CSRF is a very common type of attack, and ASP.NET has had the AntiForgery library for a long time. What’s interesting is the case where your website uses a private/public API that other clients, such as PowerShell scripts and mobile apps, also use. In this blog post, I will share my experience from a recent project where a client engaged us to address a Cross-Site Request Forgery vulnerability.
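As a generic illustration only (not the fix described in the post, and not the ASP.NET AntiForgery API), here is a minimal Python sketch of the session-bound token idea that anti-forgery libraries build on. It assumes a server-side secret key and a session identifier; `SERVER_KEY`, `issue_csrf_token`, and `verify_csrf_token` are hypothetical names chosen for the example.

```python
import hashlib
import hmac
import secrets

# Hypothetical server-side secret. In a real deployment, load it from secure
# configuration and keep it stable across server instances.
SERVER_KEY = secrets.token_bytes(32)


def _sign(session_id: str, nonce: str) -> str:
    """HMAC-SHA256 over the session ID and nonce, hex-encoded."""
    message = f"{session_id}:{nonce}".encode("utf-8")
    return hmac.new(SERVER_KEY, message, hashlib.sha256).hexdigest()


def issue_csrf_token(session_id: str) -> str:
    """Create an anti-forgery token bound to one user session.

    The page embeds this token in forms (or a request header). A malicious
    site can make the browser send the session cookie, but it cannot read
    this token, so it cannot build a request that passes verification.
    """
    nonce = secrets.token_hex(16)
    return f"{nonce}:{_sign(session_id, nonce)}"


def verify_csrf_token(session_id: str, token: str) -> bool:
    """Return True only if `token` was issued by this server for `session_id`."""
    nonce, sep, mac = token.partition(":")
    if not sep:
        return False  # malformed token: no signature part
    expected = _sign(session_id, nonce)
    # Constant-time comparison so timing does not reveal how much of the MAC matched.
    return hmac.compare_digest(expected.encode("utf-8"), mac.encode("utf-8"))


if __name__ == "__main__":
    token = issue_csrf_token("session-abc")
    print(verify_csrf_token("session-abc", token))   # True
    print(verify_csrf_token("session-xyz", token))   # False: issued for another session
    print(verify_csrf_token("session-abc", "forged"))  # False: no signature
```

In this sketch the server would call `verify_csrf_token` on every state-changing request and reject it on `False`. Clients such as PowerShell scripts that authenticate with an explicit header rather than browser cookies are generally less exposed to CSRF, which is one reason an API shared by a website and other clients can need different handling for each.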

Managing Certificates in Azure, How Bad can it be?

Azure and I have been friends for quite some time now, and I love the power that Azure gives me. He enables me to spin up a whole enterprise-like infrastructure in seconds. However, when it comes to managing certificates, Azure disappoints me. In a recent project, I got frustrated with some of Azure’s gotchas around managing security keys. In this blog post, I will share my experience of these issues.

Mobile Test-Driven Development Part (3) – Running your unit tests from your IDE

TDD in Mobile Development – Part 3

How I reduced the Worker Role time from above 5 hrs to less than 1 hour

This post talks about my experience in reducing the execution time of a Worker Role from above 5 hours to under 1 hour. This Worker Role is set up to call some external APIs to get a list of items with their promotions and store them locally: a typical batch update process that you would see in many apps. Our client was only interested in quick fixes that would reduce the time the Worker Role was taking to run. We were not allowed to change the architecture or make big changes, as they had a release deadline in a few weeks. So here is what I did.

TDD for Mobile Development

This post explores best practices in terms of code quality and testability for mobile development.

It is part of a series that talks about Unit and Integration Testing in the Mobile space. In particular, I focus on Android and iOS.

How to install Magento on Azure websites

This blog post explains, step by step, how to install a Magento package (fresh install or migration) on Azure websites.

Getting Tooltip Element in UI Automation Tests using TestStack (White) framework

We use the TestStack (White) UI Automation framework for our UI automation tests, and we have a few tests that need to read the error message from a tooltip to validate what is being displayed to the user. These tests were running fine but failing on the build server.

Getting started with Service Bus (Azure and Windows Server) with Samples

Getting Started with Service Bus

AlertDialogs that Block Execution and Wait for Input on Android (MonoDroid/Xamarin.Android)

This blog post aims at addressing the following:

Loading Drawables by name during runtime on MonoForAndroid

In Android, to load any Drawable or Layout, you need to know its resource ID in advance.

The resource IDs are auto-generated at compile time when you build the app. This works nicely when you are setting an image drawable or a single static layout.

However, the issue arises when you are trying to change a drawable or a layout file at run time. Sometimes you do not know what the name of the file might be, or it could be something that is configurable and could change.

Dropdown list in iOS (iPhone) using MonoTouch

When moving from a Windows background to iOS, you start to appreciate how easy .NET makes it to add a list control or a drop-down to your screen.

In iOS, there is no drop-down list control, so you need to write your own implementation.

Capturing Signatures on iPhones (iOS) using MonoTouch

In the previous post, I demonstrated how to capture a signature on Android devices.

In this post, we will look at how to do the same thing on iOS devices (iPhones, iPads, etc).

Capturing Signatures on Android Devices (Xamarin.Android)

In this post, I will demo how to capture signatures on Android devices, allowing users to enter a signature on the screen with a finger or a stylus.

Barcode scanning on MonoForAndroid

After searching around a little, I found that ZXing.Net.Mobile was the best candidate.

I need to implement this feature on multiple platforms, and this scanning framework seems to address that well enough.

The library is very simple and quite well designed.

Melbourne startup takes on giants like Gumtree & ServiceSeeking

Room For Improvement logo

Reading GPS location updates on iOS in MonoTouch

In a previous post, I showed how to read the battery level and status on iOS in MonoTouch. In this post, I will demo a simple way of reading GPS location updates in MonoTouch.

Reading Battery Level and Status in iOS using MonoTouch

In a previous post, I showed how to read the battery level (and other attributes) on Android. In this post, I will demo how to do the same thing on iOS.

Detecting Network Connectivity Status changes on Android (MonoForAndroid)

In the previous post, I explained how to read the Android battery level, scale, and status programmatically.

Reading Battery Level and Status on Android (MonoForAndroid)

I was tasked with implementing a new feature that involves reading the battery level and other properties and updating the header of an Android app. The idea was to give the user a regular indicator (using some icons) of the state of different hardware components and how the app is using them. This involves things like the battery, GPS, and network connectivity.

Sharing Code across multiple platforms

Recently I have been working on a project to port the codebase of a mobile logistics product from its current platform (Windows 5.0, 6.0) to Android and iOS. We spent quite some time looking at the available options and approaches. Finally, we decided to go with Xamarin MonoTouch and MonoDroid. I must say, I have been working with Xamarin products for almost 6 months now, and I find them quite reliable, except for the times when something breaks and you need to trace lots of things only to find it was a bug in Mono that you have to report. But truth be told, I find MonoDroid a lot better than developing for Android in Eclipse using ADT. That was a real pain for me 🙁

Cross Platform Xml File Reading/Writing

Recently, I was working on a project where I needed to port a great deal of C# libraries to MonoDroid and MonoTouch (for Android and iOS), so that is what I have been spending my time on.

Interviewing your interviewer

Lately, I have been doing a good deal of interview preparation and searching for new positions. I have had a good experience with the whole process, so I thought I would sum it up in a blog post so that others could benefit from my experience.